I hate Dataflex (http://en.wikipedia.org/wiki/DataFlex) with a passion!

Well, when I say that I hate Dataflex, I should say that I hate the so-called "console" mode Dataflex, the only one I've ever worked on. And I also have to say that part of my hate is due to poor training or, better said, no training at all.

How did I ever meet Dataflex (df31d)? Well, you know, the rent is a good motivation to work with tools you don't like very much.

From the beginning, the language itself felt awkward to me. Coming from some real languages (C, Perl, Java), I did not feel at home with a case-insensitive language.

The total lack of braces and the return to the BEGIN-END syntax was quite a shock.

No multi-line comments.

A compiler that crashed every time you had a line longer than 256 characters... and no, I'm not joking! I don't remember how many hours I spent trying to understand why a program would not compile at all, without any error message, only to discover that somewhere I had a fairly complex (and indented) IF clause that had exceeded the right margin. And I have to say that I often laugh when I think about this stupid bug, probably implemented in a way I have only seen in didactic C examples such as:

```c
#define MAX_LINE_SIZE 256

...

char current_line[ MAX_LINE_SIZE ];
```
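A small pre-flight check would have saved me those hours. What follows is a minimal Python sketch, not anything that shipped with Dataflex: it lists the lines that exceed the limit before the compiler ever sees them. The function name `find_long_lines` is made up, and the 256-character threshold is simply the figure I remember, so treat it as an assumption.

```python
def find_long_lines(source_text, max_len=256):
    """Return (line_number, length) pairs for lines longer than max_len.

    max_len=256 is the limit as remembered in this post; the real
    threshold of the compiler is an assumption.
    """
    offenders = []
    for number, line in enumerate(source_text.splitlines(), start=1):
        if len(line) > max_len:
            offenders.append((number, len(line)))
    return offenders


if __name__ == "__main__":
    sample = "short line\n" + "x" * 300 + "\nanother short line\n"
    for number, length in find_long_lines(sample):
        print(f"line {number}: {length} characters")
```

Run against every source file before compiling, a check like this turns a silent crash into a list of line numbers.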

Anyway, I started writing my programs and, as usual with a new language, my first efforts were baby steps in the Dataflex world. My programs were simple, written in a plain and well-documented way, so that they looked even more stupid to me.

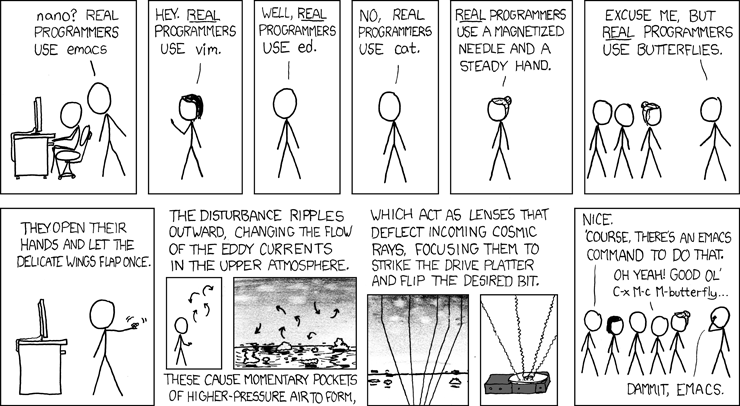

There was no IDE for Dataflex development, so I fired up my Emacs for the job. But it was not a simple task, since Dataflex displayed its masks on the screen using the DOS character set, which at that time did not ship with Emacs. I therefore had to compile the appropriate encoding, add it to Emacs, and configure the editor to load that encoding for every Dataflex file (.frm). At that time I only knew the very basics of Emacs, so it was quite a hard job for me.
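To give an idea of what the encoding problem looks like, here is a minimal Python sketch. It assumes the "DOS character set" was code page 437 (it could just as well have been another DOS code page), and the sample bytes are made up to resemble the top border of a screen mask.

```python
def decode_dos(data):
    """Decode raw bytes using code page 437 (assumed DOS character set)."""
    return data.decode("cp437")


# Made-up sample: the top border of a double-line box as raw bytes.
raw_border = bytes([0xC9, 0xCD, 0xCD, 0xCD, 0xBB])

if __name__ == "__main__":
    # With the right code page the bytes become box-drawing characters;
    # with the wrong one they show up as accented letters or garbage.
    print(decode_dos(raw_border))
    print(raw_border.decode("latin-1"))
```

An editor that does not know the code page shows the second form, which is exactly what made the masks unreadable.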

As I said, I was coming from some experience with real languages, and while you can get over the syntax and the buggy compiler, you cannot live without methods. Well, my Dataflex was without methods. I had two choices: define a "routine", invoked via a far jump (GOSUB), or use labels to far jump to other pieces of the program (GOTO).

The operator set was... tiny. Moreover, many operators were spelled out as words, so that comparisons used GT, GE, LE, LT and so on.

Assignment was performed via a MOVE...TO command. If my memory serves me well, the only "smart" arithmetic operators were INCREMENT and DECREMENT.

To complete the nightmare, I did not have any kind of good documentation (and I was not able to find any on the Web).

But you did have the opportunity to define "macros", in the C language sense. OK, this sounds good, until a macro clashes with some variable or loop name.

Last but not least, the compiler reported errors against line numbers counted after macro expansion. In other words, when the compiler reported an error on line X, your actual error could be many lines earlier in the source, because of the lines added by macro expansion.

After working with all this mess for a while, I found the special DEBUG command. Its purpose was to print on the screen the line the program was executing. But it was not very helpful, since it just printed a number (like 123) on the screen exactly where the cursor was, filling your application with digits and making me feel like I was looking at the Matrix screen.

Next I discovered the -v switch of the compiler, and I found it could be increased at least to -vvv to get more verbose messages. Or rather, the messages were as obscure as usual, but the processed file (with macros expanded) was printed on the screen, so that you could find the offending line number with more accuracy.

Then came methods. Yeah! A great day, one that made me feel a bit more at home.

Well, methods in Dataflex are not what you would expect from other languages. The prototype is extremely verbose, and the invocation reminds me of Lisp, since you have to put the method name in parentheses (along with the arguments):

(foo(1, 2, 3))

But hey, at least you have some real reusable code without the name clashing of a macro and with a return value!

The special key handling was a pure mess! Dataflex used subroutines to handle events generated by special keys, with some confusion on what, when, and how to resume the control flow.

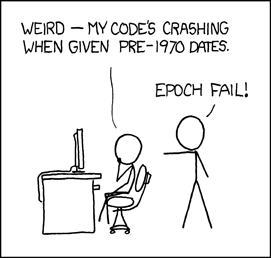

The database structure was...not a database structure, at least in my opinion.

If my memory serves me well, you had to define a new archive (a kind of ISAM file) using a specific program, which ensured that the data file and the indexes (kept in separate files) were in place. The fresh archive was then added to the so-called filelist, which was in charge of listing all available databases (it played roughly the role of a schema in an RDBMS). Modifying an archive (e.g., adding a field) was of course a locking operation, so you had to schedule maintenance. And since the filelist was limited in size, you had a limited number of tables/archives in your deployment.

One way to work around the limit on the number of archives was to play with user paths: much like the schema search path in an RDBMS, a user could have several copies of the same archive, with the same binary structure and different content, in different locations on disk. Pointing to one or another would do the trick.

We used this in particular to scatter a few utility archives among users, so that everyone could have their own copy.
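The user path trick boils down to "first match wins" along a list of directories. Here is a minimal Python sketch of the idea. It is not how the Dataflex runtime was implemented: the function name `resolve_archive`, the directory names, and the file name `utility.dat` are all made up for illustration.

```python
from pathlib import Path


def resolve_archive(file_name, search_path):
    """Return the first existing copy of file_name along search_path.

    search_path is an ordered list of directories: putting a per-user
    directory first makes that user's private copy shadow the shared one.
    Returns None when no directory contains the file.
    """
    for directory in search_path:
        candidate = Path(directory) / file_name
        if candidate.exists():
            return candidate
    return None


if __name__ == "__main__":
    # Hypothetical layout: a private directory searched before the shared one.
    print(resolve_archive("utility.dat", ["users/luca/data", "shared/data"]))
```

Two users with different first entries in their search path open the same archive name and silently get different data, which is exactly what we wanted for the utility archives.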

Since the archives relied on the file system, data corruption was kept in check by the operating system and its file system. Using Linux, luckily, there were not many corruptions, but ship happens, and then you had to run a specific tool to reindex the whole archive. Of course, this was another full-locking operation.

In general, the speed of data retrieval was good, but the approach was record-at-a-time (as opposed to set-based), and therefore all programs contained long, nested loops to extract the information you needed. The relational part of a query (e.g., a join) was entirely the developer's responsibility, and therefore missing a single attribute could destroy all your retrieval logic in a subtle way.
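To show what "the join is the developer's job" means, here is a minimal Python sketch (not Dataflex code). The two archives, their field names, and the `match_customer` flag are made up; the flag exists only to simulate forgetting one attribute in the join condition.

```python
customers = [
    {"id": 1, "name": "Alice"},
    {"id": 2, "name": "Bob"},
]
orders = [
    {"customer_id": 1, "year": 2009, "total": 10},
    {"customer_id": 1, "year": 2010, "total": 20},
    {"customer_id": 2, "year": 2010, "total": 30},
]


def orders_for_year(year, match_customer=True):
    """Hand-written nested-loop join, one record at a time.

    match_customer=False simulates forgetting the join attribute:
    nothing crashes, the result is simply wrong.
    """
    rows = []
    for customer in customers:          # outer loop over one archive
        for order in orders:            # inner loop over the related one
            if match_customer and order["customer_id"] != customer["id"]:
                continue
            if order["year"] == year:
                rows.append((customer["name"], order["total"]))
    return rows


if __name__ == "__main__":
    print(orders_for_year(2010))                        # two correct rows
    print(orders_for_year(2010, match_customer=False))  # four rows, no error
```

The second call pairs every customer with every 2010 order and nobody complains, which is the subtle kind of breakage I mean.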

OK, so there were BEGIN-END, loops, GOTO/GOSUB and locking operations... but the system worked. And it worked up to a few gigabytes of data, so I have to say I was quite impressed.

Of course, you did not have the flexibility of SQL, and you did not even have a way to specify a query that was not "pre-built". Allow me to elaborate a bit more: as I said, you had to define indexes for every archive. An index defined how the archive could be read, that is, in which order you could loop through the records. What if you wanted to retrieve the records in another order? You had to either define a new index (but you were limited by the number of indexes and by locking operations) or use the existing ones in an esoteric way, making your loops even more complex and your program a lot less readable.

Last but not least: an index was not only an access method, but also the way to define a unique constraint. Therefore, you were locked into only a few indexes for every archive, and the rest was a huge REPL.

There was also the catch-all looping mechanism: the sequential scan of the whole archive (also known as BY RECNUM).
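The two roles of an index (reading order and uniqueness) can be sketched in a few lines of Python. This is a toy model, not the real on-disk format: the `Index` class, the archive contents, and the field names are all made up.

```python
import bisect


class Index:
    """Toy index: (key, recnum) pairs kept sorted by key; keys are unique."""

    def __init__(self, fields):
        self.fields = fields
        self.entries = []

    def add(self, recnum, record):
        key = tuple(record[field] for field in self.fields)
        # (key,) sorts before any (key, recnum), so this finds the first
        # entry whose key is >= the new key.
        pos = bisect.bisect_left(self.entries, (key,))
        if pos < len(self.entries) and self.entries[pos][0] == key:
            raise ValueError(f"duplicate key {key!r}")
        self.entries.insert(pos, (key, recnum))

    def scan(self):
        """Record numbers in index order, the only order a loop gets for free."""
        return [recnum for _, recnum in self.entries]


archive = {1: {"name": "Carol"}, 2: {"name": "Alice"}, 3: {"name": "Bob"}}

by_name = Index(["name"])
for recnum, record in archive.items():
    by_name.add(recnum, record)

if __name__ == "__main__":
    print(sorted(archive))   # [1, 2, 3]  sequential scan, like BY RECNUM
    print(by_name.scan())    # [2, 3, 1]  scan in name order
```

Any order that is not backed by an index has to be built by hand on top of one of these two scans, and adding a second record named "Alice" fails because the same structure doubles as the unique constraint.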

Adding records was quite simple: a special instruction, SAVERECORD, existed for that purpose.

Modifying a record was a little more complex, since you had to lock via the REREAD command, modify fields and then issue a SAVERECORD followed by an UNLOCK.
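The update sequence maps onto the classic lock, re-read, modify, save, unlock pattern. Below is a minimal Python sketch of that pattern, not Dataflex code: the `Archive` class and its methods are made up, an in-process `threading.Lock` stands in for the real file locking, and I am assuming that REREAD also refreshed the record from disk after locking.

```python
import threading


class Archive:
    """Toy archive: records in a dict, one lock for the whole archive."""

    def __init__(self):
        self._lock = threading.Lock()
        self._records = {}

    def save_new(self, recnum, fields):
        """Adding a record: just a save, no lock dance needed."""
        self._records[recnum] = dict(fields)

    def update(self, recnum, changes):
        self._lock.acquire()                       # REREAD: lock...
        try:
            record = dict(self._records[recnum])   # ...and read a fresh copy
            record.update(changes)                 # modify the fields
            self._records[recnum] = record         # SAVERECORD
        finally:
            self._lock.release()                   # UNLOCK, even on errors
        return record


if __name__ == "__main__":
    archive = Archive()
    archive.save_new(1, {"item": "bolt", "qty": 10})
    print(archive.update(1, {"qty": 9}))
```

The `finally` clause is the part that was easy to get wrong by hand: an early exit between the lock and the UNLOCK left everybody else waiting.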

Perl came to the rescue.

At that time I was mainly using shell scripting, but here I needed something a little more complex to handle all the mess left around by Dataflex. For instance, I used a Perl script to convert and mangle output text before sending it to the printer. Dataflex was absolutely not good at handling text!

I also used Perl to control how the users jumped into the Dataflex runtime, and this allowed us to ease the management of the sessions when locking operations were absolutely necessary.

Finally, I used Perl to mangle some Dataflex source code in order to avoid some boring stuff.

I have to say that, in order to automate some looping, Dataflex provided WINDOWINDEX and FIELDINDEX, two special (global) variables to iterate over UI and database fields. Please note that these variables were global, so a wrong initialization could make you fly to the wrong record or field!
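The danger of a global cursor is easy to reproduce in any language. Here is a minimal Python sketch: `FIELD_INDEX` only stands in for a global like FIELDINDEX, and the record, the helper names, and the `reset` flag are made up to simulate a forgotten initialization.

```python
FIELD_INDEX = 0  # stands in for a global cursor such as FIELDINDEX

record = ["ACME", "Rome", "IT"]


def next_field():
    """Return the field under the global cursor and advance the cursor."""
    global FIELD_INDEX
    value = record[FIELD_INDEX]
    FIELD_INDEX += 1
    return value


def read_fields(count, reset=True):
    """Read `count` fields; reset=False simulates a forgotten initialization."""
    global FIELD_INDEX
    if reset:
        FIELD_INDEX = 0
    return [next_field() for _ in range(count)]


if __name__ == "__main__":
    print(read_fields(2))               # starts from the first field
    print(read_fields(1, reset=False))  # continues where the last loop stopped
```

The second call was meant to read the first field, but it picks up wherever some earlier loop left the cursor.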

Now, after all this mess, I have to say that I'm aware of a lot of good uses of Dataflex, which also has a kind of OOP interface. As I said, I had to work on a lot of legacy code, and without documentation and appropriate training it was nearly impossible for me to use the "advanced" features.

As a final word, please note that Dataflex was quite an old language, so it is obvious that it looks scary and awkward when compared to modern languages.

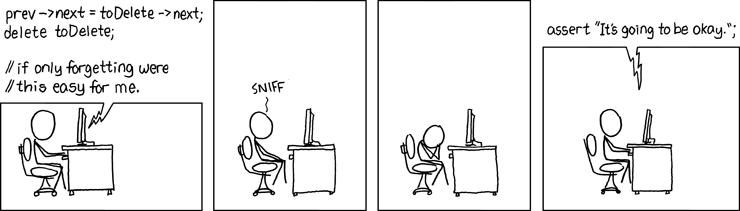

But sometimes I still have nightmares about Dataflex!